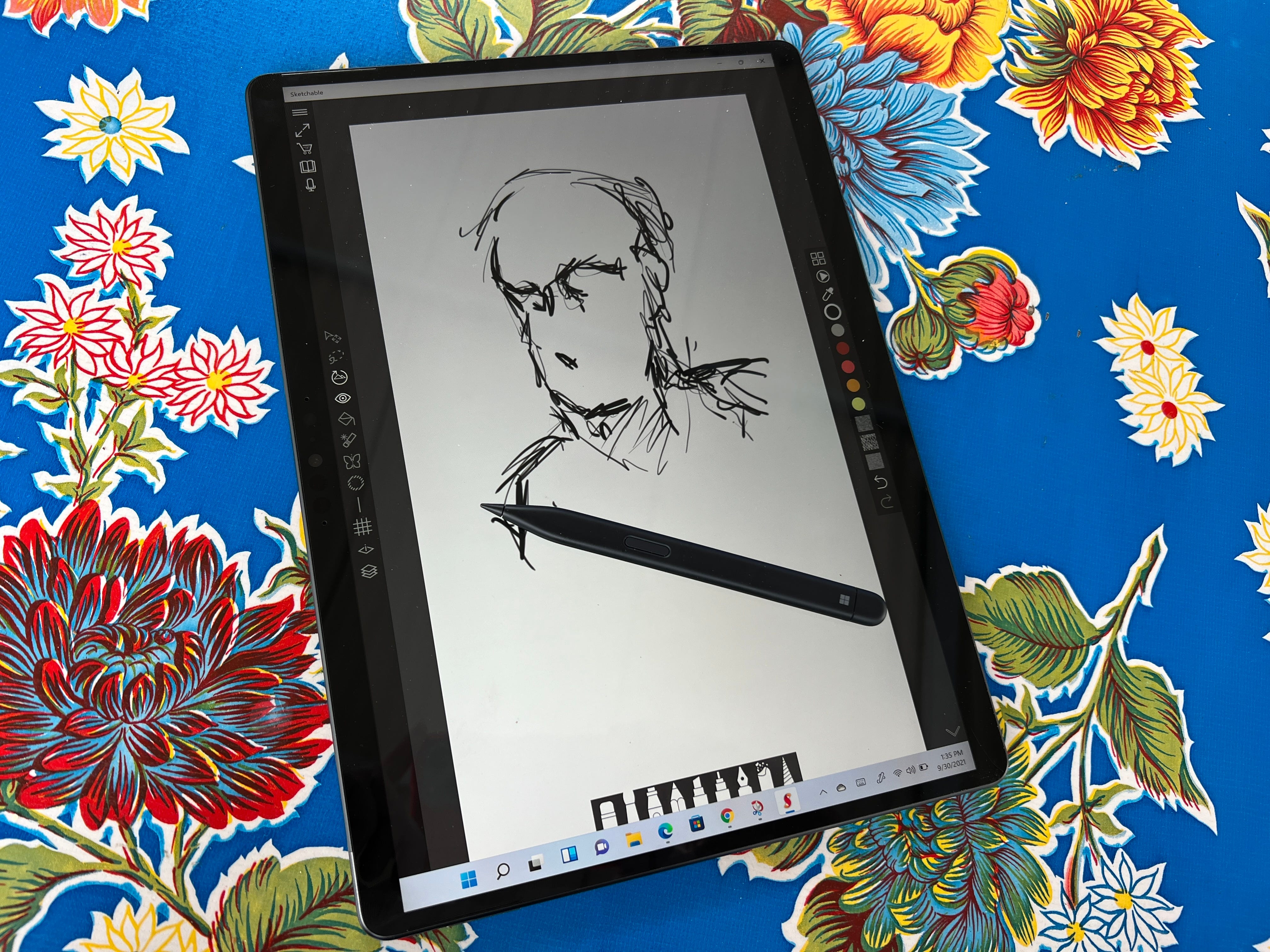

What are Nvidia G-Sync and AMD FreeSync and which do I need?

There are many ways to compensate for the disconnect between screen updates and gameplay frame rate, ranging from the brute-force method of simply capping your game's frame rate to match your monitor's refresh rate to the more intelligent realm of variable refresh rate (VRR). VRR syncs the two to prevent artifacts like tearing (where it looks like parts of different frames are mixed together on screen) and stutter (where the screen updates at perceptibly irregular intervals). The options run from basic in-game frame rate control up to pricey hardware-based implementations such as Nvidia G-Sync Ultimate and AMD FreeSync Premium.
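To make the stutter problem concrete, here is a minimal sketch of when frames actually reach the screen. It assumes a simplified model that is not from any vendor's documentation: on a fixed-refresh display with vsync, a finished frame waits for the next refresh tick, while a VRR display shows it as soon as it is ready (limited only by the panel's maximum rate). The function names are hypothetical.

```python
import math

def present_times_fixed(frame_ready_ms, refresh_hz):
    """Fixed refresh with vsync: each frame waits for the next refresh tick."""
    shown = []
    for t in frame_ready_ms:
        tick = math.ceil(t * refresh_hz / 1000)  # index of the next refresh
        shown.append(tick * 1000 / refresh_hz)   # time of that refresh in ms
    return shown

def present_times_vrr(frame_ready_ms, max_hz):
    """Simplified VRR: show each frame when ready, no faster than max_hz."""
    min_gap = 1000.0 / max_hz
    shown = []
    for t in frame_ready_ms:
        earliest = shown[-1] + min_gap if shown else 0.0
        shown.append(max(t, earliest))
    return shown

def intervals(times):
    """Gaps between consecutive on-screen frames, in milliseconds."""
    return [round(b - a, 2) for a, b in zip(times, times[1:])]
```

Feeding both functions a steady 50fps stream (a frame ready every 20ms) shows the difference: on a 60Hz fixed-refresh display most frames appear 16.67ms apart with a periodic 33.33ms hitch, while the VRR model keeps an even 20ms between frames.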

Which do you want?

When picking a monitor, which VRR system to look for comes down to which graphics card you own -- especially now, when you can't really buy a new GPU -- and which games you play, plus the monitor specs and choices available. Nvidia's G-Sync and G-Sync Ultimate and AMD's FreeSync Premium and Premium Pro are mutually exclusive; you'll rarely (if ever) see variations of the same monitor with options for both. In other words, every other decision you make pretty much determines which VRR scheme you get.

Basic VRR

Basic VRR enables games to use their own methods of syncing frame rate and refresh rate, which on the PC frequently means the game just caps the frame rate it will allow. One step up from that is generic adaptive refresh rate, which uses system-level technologies to vary the screen update rate based on the frame rate coming out of the game. This can deliver a better result than plain VRR as long as your frame rates aren't all over the place within a short span of time.
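The frame rate cap described above can be sketched as a simple limiter loop. This is an illustration under stated assumptions, not how any particular game engine does it: `run_capped` and `render_frame` are hypothetical names, and the `clock` and `sleep` parameters exist only so the timing can be swapped out for testing.

```python
import time

def run_capped(render_frame, cap_fps, n_frames,
               clock=time.perf_counter, sleep=time.sleep):
    """Render n_frames, never starting frames faster than cap_fps."""
    frame_budget = 1.0 / cap_fps      # seconds allotted to each frame
    deadline = clock()
    for _ in range(n_frames):
        render_frame()
        deadline += frame_budget
        remaining = deadline - clock()
        if remaining > 0:
            sleep(remaining)          # frame finished early: wait out the budget
        else:
            deadline = clock()        # frame ran long: reset instead of catching up
```

Calling `run_capped(draw, cap_fps=60, n_frames=600)` with your own `draw` function would hold the loop to roughly 60 frames per second on a machine that could otherwise render faster. Note that a cap only limits the top end; it does nothing when the game falls below the monitor's refresh rate.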

G-Sync Compatible and FreeSync

In the bottom tier of Nvidia's and AMD's VRR technologies you'll find improved versions of adaptive refresh, branded G-Sync Compatible and FreeSync. They use the GPU's hardware to improve VRR performance, but they rely on hardware capabilities common to both Nvidia and AMD GPUs. That means you can use whichever one the monitor supports, provided the graphics card driver lets you enable one manufacturer's scheme on the other manufacturer's card. Unlike FreeSync, though, G-Sync Compatible implies Nvidia has tested the monitor for an "approved" level of artifact reduction.

G-Sync and FreeSync Premium

The first serious levels of hardware-based adaptive refresh are G-Sync and FreeSync Premium. They both require manufacturer-specific hardware in the monitor that works in conjunction with their respective GPUs in order to apply more advanced algorithms, such as low frame rate compensation (AMD) or variable overdrive (Nvidia), for better results with less performance overhead. Both also set minimum requirements for monitor specs. For monitors, however, G-Sync still works only over a DisplayPort connection because it uses DisplayPort's Adaptive Sync, which is frustrating because it does work over HDMI for some TVs.
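The low frame rate compensation mentioned above is commonly explained as frame multiplication: when the game's frame rate drops below the bottom of the monitor's VRR range, each frame is shown two or more times so the panel's refresh rate lands back inside the range. The sketch below illustrates that general idea only; it is not AMD's actual algorithm, and the function name and the 48Hz-to-144Hz range used in the example are assumptions.

```python
def lfc_refresh_rate(fps, vrr_min_hz, vrr_max_hz):
    """Return (refresh_hz, repeats): the rate the panel runs at and how many
    times each game frame is shown. Illustrative model, not a vendor algorithm."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= vrr_min_hz:
        # Inside (or above) the range: no repeats; the panel tops out at its max.
        return min(fps, vrr_max_hz), 1
    repeats = 2
    while fps * repeats < vrr_min_hz:
        repeats += 1                  # keep multiplying until we clear the minimum
    if fps * repeats > vrr_max_hz:
        # The range is too narrow for any whole multiple of this frame rate.
        raise ValueError("VRR range too narrow to compensate")
    return fps * repeats, repeats
```

With an assumed 48Hz-to-144Hz range, a game running at 30fps would have each frame shown twice for a 60Hz refresh, and one running at 20fps would have each frame shown three times. The error branch hints at why this kind of compensation needs a reasonably wide VRR range to work.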

At CES 2022, Nvidia launched its next-generation 1440p G-Sync Esports standard with Reflex Latency Analyzer (Nvidia's technology for minimizing lag of the combined keyboard, mouse and display) and a 25-inch mode that can simulate that size display on a larger monitor. Normalizing high-quality 1440p 27-inch displays for esports is a great step up from 1080p and 25 inches. The initial monitors that will support it (the ViewSonic Elite XG271QG, AOC Agon Pro AG274QGM and MSI MEG 271Q, all with a 300Hz refresh rate, and the Asus ROG Swift 360Hz PG27AQN) haven't shipped yet.

(Mini rant: Nvidia's naming scheme would make a monitor that works with the base tier "G-Sync Compatible-compatible," so you'll see the base capability referred to as a "G-Sync Compatible monitor." That's seriously misleading, because you're frequently called on to distinguish between uppercase and lowercase: G-Sync Compatible is not the same as G-Sync-compatible.)

G-Sync Ultimate and FreeSync Premium Pro

At the top of the VRR food chain are G-Sync Ultimate and FreeSync Premium Pro. They both require a complete ecosystem of support -- game and monitor in addition to the GPU -- and primarily add HDR optimization in addition to further VRR-based compensation algorithms.

The hardware-based options tend to add to the price of a monitor. Whether you need or want them depends on the games you play (if your games don't support these technologies, it's kind of pointless to pay extra for them), how sensitive you are to artifacts and how bad the disconnect is between your display and the gameplay.
